From Hydroxychloroquine to AI: What a Failed COVID Treatment Taught Me About Digital Health Misinformation

September 19, 2025

Five years after documenting the rise and fall of hydroxychloroquine, I'm seeing the same dangerous patterns in how AI health innovations spread—and why the stakes for equity have never been higher.

The trajectory of hydroxychloroquine during COVID-19: from promising treatment to cautionary tale

In early 2021, I published a paper with an oddly prescient title: "The Rise and Fall of Hydroxychloroquine for the Treatment and Prevention of COVID-19." At the time, I thought I was simply documenting a cautionary tale about how politics can derail science. What I didn't realize was that I was also mapping the blueprint for how misinformation spreads in our hyper-connected, algorithmically driven world. That blueprint is now playing out again with artificial intelligence in healthcare.

The Pattern I Didn't See Coming

The hydroxychloroquine story followed a depressingly familiar arc: promising laboratory results, breathless media coverage, political endorsement, massive research investment, gradual evidence of ineffectiveness, and ultimately, widespread disillusionment. What made it unique wasn't the pattern itself—medical history is littered with promising treatments that didn't pan out. What made it extraordinary was the speed and scale at which it unfolded.

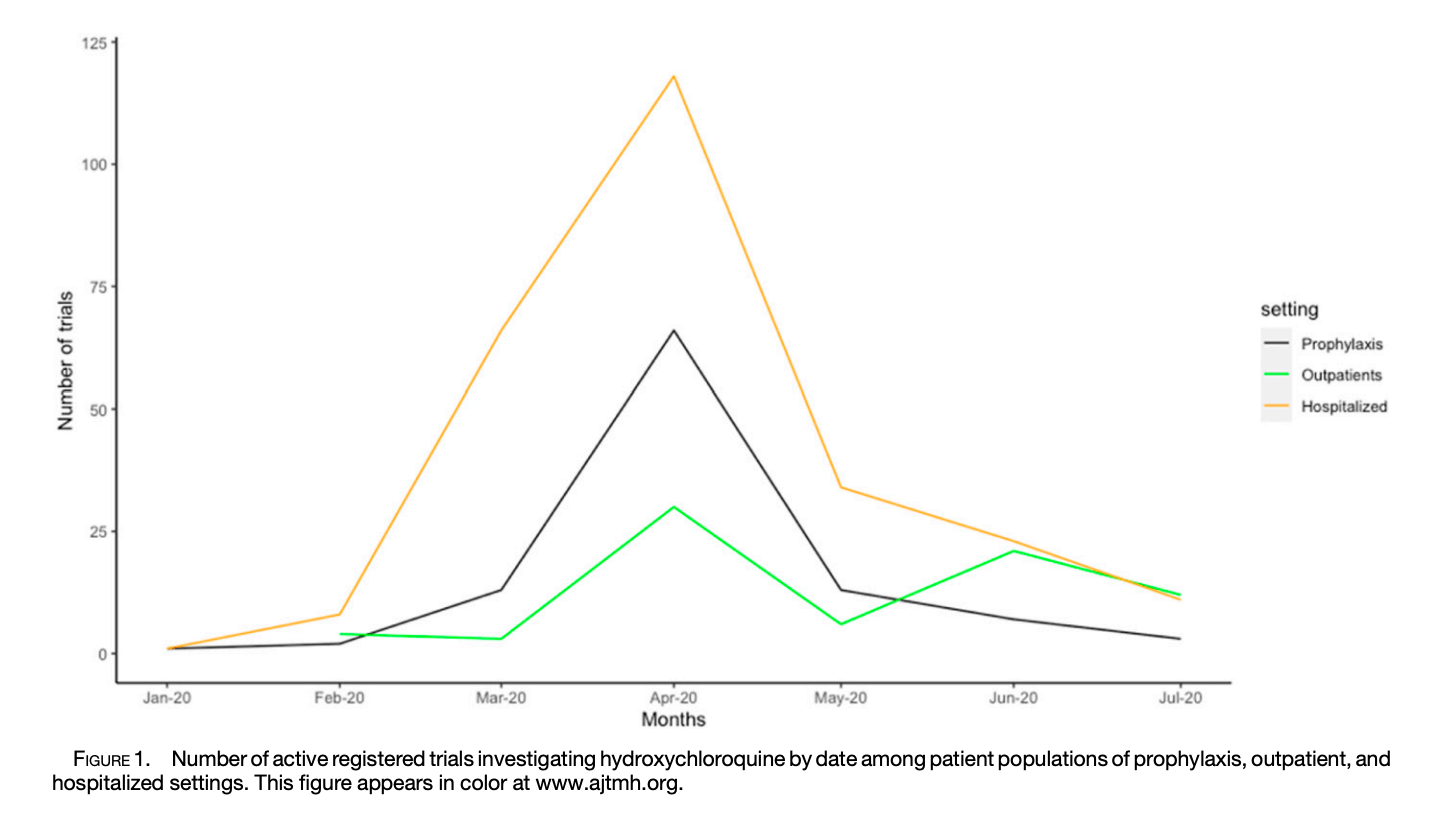

Within weeks of the first small French study, over 300 clinical trials were registered globally. Emergency use authorizations were issued. Political leaders were promoting it on television. Social media was amplifying every positive anecdote while algorithms buried contradictory evidence. The traditional gatekeepers of medical knowledge—peer review, professional societies, regulatory agencies—were simply overwhelmed by the velocity of information and misinformation.

As I documented the numbers behind this phenomenon, one statistic haunted me: of the 341 trials registered to evaluate hydroxychloroquine, fewer than half ever recruited a single patient. The media frenzy had created the appearance of a massive scientific enterprise while the actual science was fragmenting into underpowered studies that could never provide definitive answers.

The AI Echo

Fast forward to 2025, and I'm witnessing something eerily familiar in how artificial intelligence innovations are being promoted, adopted, and implemented in healthcare systems worldwide. The same accelerated timeline, the same breathless coverage, the same mixture of legitimate promise and dangerous hype.

Consider the parallels:

Laboratory Promise: Just as hydroxychloroquine showed antiviral activity in test tubes, AI algorithms demonstrate impressive performance in carefully controlled research settings. Both represent genuine scientific advances that work under specific conditions.

Media Amplification: Early positive results get amplified through digital channels that reward novelty and impact over nuance. Success stories spread faster than methodological concerns or equity considerations.

Implementation Pressure: Health systems feel compelled to adopt AI solutions not because of rigorous evidence, but because of competitive pressure and fear of being left behind—the same dynamics that drove hydroxychloroquine prescribing.

Fragmented Evidence: Rather than large, coordinated evaluations of AI's real-world effectiveness, we're seeing hundreds of small pilot studies that can never definitively answer questions about equity, safety, or long-term impact.

What the HCQ Story Taught Me About Digital Equity

But there's a crucial difference that makes the AI situation potentially more consequential than hydroxychloroquine (HCQ) ever was. HCQ was a single drug that either worked or didn't, and when it didn't work, we could stop using it and move on. AI systems, once embedded in healthcare delivery, become part of the infrastructure. Their biases become institutionalized. Their blind spots become systematic gaps in care.

The hydroxychloroquine experience taught me to pay attention not just to what gets studied, but to what gets left out. In our analysis, we found that the vast majority of trials focused on hospitalized patients in well-resourced settings. Rural communities, marginalized populations, and low-income countries were largely absent from the research agenda. When the evidence finally showed HCQ didn't work, we at least had evidence—but only for certain populations.

With AI in healthcare, I'm seeing the same pattern of selective attention. The algorithms that get the most investment and research attention are those that serve markets where there's money to be made. The applications that could most benefit underserved communities—like AI-assisted diagnosis in resource-constrained settings—receive less attention, smaller studies, and less rigorous evaluation.

The Speed Problem

Perhaps most importantly, the hydroxychloroquine saga revealed how digital communication can compress the normal timeline of scientific consensus-building in ways that undermine equity. In the traditional model, promising treatments undergo years of careful testing, which leaves time to identify differential effects across populations. The digital acceleration of both hype and research means we're making implementation decisions before we understand how innovations perform in different contexts.

This speed problem is even more acute with AI because the technology itself enables rapid scaling. A biased algorithm can be deployed across thousands of clinical sites faster than researchers can identify and document its differential impacts. By the time we recognize that an AI system works brilliantly for urban, insured patients but poorly for rural or marginalized communities, it may already be deeply embedded in clinical workflows.

Learning From Our Mistakes

The hydroxychloroquine story ended with a clear scientific consensus: the drug doesn't work for COVID-19. But the larger story it tells about how evidence gets generated, communicated, and translated into policy in our digital age is far from over. That story is now playing out with AI in healthcare, and this time the stakes for equity are even higher.

The question isn't whether AI will transform healthcare; it is already doing so. The question is whether we can learn from the hydroxychloroquine experience to ensure that transformation serves justice rather than amplifying existing disparities. That means:

- Demanding equity considerations in AI development from the start, not as an afterthought

- Insisting on representative datasets and inclusive research designs

- Building mechanisms for ongoing monitoring of differential impacts

- Creating space for communities to participate in defining how AI serves their needs

- Resisting the pressure to implement before we understand

The hydroxychloroquine saga taught me that in our hyper-connected world, the absence of evidence can quickly become distorted into evidence of absence. With AI in healthcare, we can't afford to repeat that mistake. The communities most likely to be harmed by biased AI systems are also the least likely to be included in the studies that evaluate them.

This time, we have to do better. The blueprint is already written in the hydroxychloroquine story—we just need to pay attention to its lessons.

Reflections on a Professional Arc

Looking back, I realize that documenting the hydroxychloroquine phenomenon was my first real encounter with how digital technologies can amplify both innovation and inequity simultaneously. What started as a straightforward analysis of clinical trial data became a window into the deeper dynamics of how evidence gets created and contested in our networked age.

That realization has shaped everything I've done since—from leading the TOGETHER trial consortium to my current focus on AI and global health partnerships. The hydroxychloroquine story taught me that the most important equity questions aren't just about who benefits from health innovations, but about who gets included in defining what counts as evidence in the first place.

In many ways, this is the through-line of my career: understanding how power dynamics shape whose voices get heard, whose experiences get measured, and whose needs get prioritized as health systems become increasingly digitized. The hydroxychloroquine paper was just the beginning of that story.

The research publication that documented the hydroxychloroquine phenomenon and its lessons for digital health misinformation

This piece reflects on "The Rise and Fall of Hydroxychloroquine for the Treatment and Prevention of COVID-19" published in the American Journal of Tropical Medicine and Hygiene, 2021.